Posted by jonoalderson

Websites, like the businesses who operate them, are often deceptively complicated machines.

They’re fragile systems, and changing or replacing any one of the parts can easily affect (or even break) the whole setup — often in ways not immediately obvious to stakeholders or developers.

Even seemingly simple sites are often powered by complex technology, like content management systems, databases, and templating engines. There’s much more going on behind the scenes — technically and organizationally — than you can easily observe by crawling a site or viewing the source code.

When you change a website and remove or add elements, it’s not uncommon to introduce new errors, flaws, or faults.

That’s why I get extremely nervous whenever I hear a client or business announce that they’re intending to undergo a "site migration."

Chances are, and experience suggests, that something’s going to go wrong.

Migrations vary wildly in scope

As an SEO consultant and practitioner, I've been involved in more "site migrations" than I can remember or count — for charities, startups, international e-commerce sites, and even global household brands. Every one has been uniquely challenging and stressful.

In each case, the businesses involved have underestimated (and in some cases, increased) the complexity, the risk, and the details involved in successfully executing their "migration."

As a result, many of these projects negatively impacted performance and potential in ways that could have been easily avoided.

This isn’t a case of the scope of the "migration" being too big, but rather, a misalignment of understanding, objectives, methods, and priorities, resulting in stakeholders working on entirely different scopes.

The migrations I’ve experienced have varied from simple domain transfers to complete overhauls of server infrastructure, content management frameworks, templates, and pages — sometimes even scaling up to include the consolidation (or fragmentation) of multiple websites and brands.

In the minds of each organization, however, these have all been "migration" projects despite their significantly varying (and poorly defined) scopes. In each case, the definition and understanding of the word "migration" has varied wildly.

We suck at definitions

As an industry, we’re used to struggling with labels. We’re still not sure if we’re SEOs, inbound marketers, digital marketers, or just… marketers. The problem is that, when we speak to each other (and those outside of our industry), these words can carry different meanings and expectations.

Even amongst ourselves, a conversation between two digital marketers, analysts, or SEOs about their fields of expertise is likely to reveal that they have surprisingly different definitions of their roles, responsibilities, and remits. To them, words like "content" or "platform" might mean different things.

In the same way, "site migrations" vary wildly in form, function, and execution, and when we discuss them, we’re not necessarily talking about the same thing. If we don’t clarify our meanings and have shared definitions, we risk misunderstandings, errors, or even offense.

Ambiguity creates risk

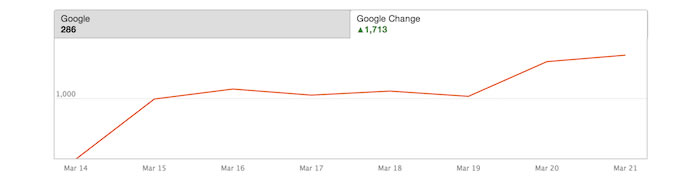

Poorly managed migrations can have a number of consequences beyond just drops in rankings, traffic, and performance. There are secondary impacts, too. They can also inadvertently:

- Provide a poor user experience (e.g., old URLs now 404, or error states are confusing to users, or a user reaches a page different from what they expected).

- Break or omit tracking and/or analytics implementations, resulting in loss of business intelligence.

- Limit the size, shape, or scalability of a site, resulting in static, stagnant, or inflexible templates and content (e.g., omitting the ability to add or edit pages, content, and/or sections in a CMS), and a site which struggles to compete as a result.

- Miss opportunities to benefit from what SEOs do best: blending an understanding of consumer demand and behavior, the market and competitors, and the brand in question to create more effective strategies, functionality and content.

- Create conflict between stakeholders, when we need to "hustle" at the last minute to retrofit our requirements into an already complex project (“I know it’s about to go live, but PLEASE can we add analytics conversion tracking?”) — often at the cost of our reputation.

- Waste future resources, because mistakes mean that time and budget must later be spent recouping equity lost to faults or omissions in the process, rather than building on and enhancing performance.

I should point out that there’s nothing wrong with hustle in this case; in fact, begging, borrowing, and stealing can often be a viable solution in these kinds of scenarios. There’s been more than one occasion when, late at night before a site migration, I’ve averted disaster by literally begging developers to include template review processes, to implement redirects, or to stall deployments.

But this isn’t a sensible, sustainable, or reliable way of working.

Mistakes will inevitably be made. Resources, favors, and patience are finite. Too much reliance on "hustle" from individuals (or multiple individuals) may in fact further widen the gap in understanding and scope, and position the hustler as a single point of failure.

More importantly, hustle may only fix the symptoms, not the cause of these issues. That means that we remain stuck in a role as the disruptive outsiders who constantly squeeze in extra unscoped requirements at the eleventh hour.

Where things go wrong

If we’re to begin to address some of these challenges, we need to understand when, where, and why migration projects go wrong.

The root cause of all less-than-perfect migrations can be traced to at least one of the following scenarios:

- The migration project occurs without consultation.

- Consultation is sought too late in the process, and/or after the migration.

- There isn’t enough planned resource, time, or budget to add requirements (or processes) or to make recommended changes to the brief.

- The scope is changed mid-project, without consultation, or in a way which de-prioritizes requirements.

- Requirements and/or recommended changes are axed at the eleventh hour (due to resource/time/budget limitations, or educational/political conflicts).

There’s a common theme in each of these cases. We’re not involved early enough in the process, or our opinions and priorities don’t carry sufficient weight to impact timelines and resources.

These mistakes are rarely the product of spite or of intentional omission; rather, they’re born of gaps in the education and experience of the stakeholders and decision-makers involved.

We can address this, to a degree, by elevating ourselves to senior stakeholders in these kinds of projects, and by being consulted much earlier in the timeline.

Let’s be more specific

I think that it’s our responsibility to help the organizations we work for to avoid these mistakes. One of the easiest opportunities to do that is to make sure that we’re talking about the same thing, as early in the process as possible.

Otherwise, migrations will continue to go wrong, and we will continue to spend far too much of our collective time fixing broken links, recommending changes or improvements to templates, and holding together bruised-and-broken websites — all at the expense of doing meaningful, impactful work.

Perhaps we can begin to answer some of these challenges by creating better definitions and helping to clarify exactly what’s involved in a "site migration" process.

Unfortunately, I suspect that we’re stuck with the word "migration," at least for now. It’s a term which is already widely used, which people think is a correct and appropriate definition. It’s unrealistic to try to change everybody else’s language when we’re already too late to the conversation.

Our next best opportunity to reduce ambiguity and risk is to codify the types of migration. This gives us a chance to prompt further exploration and better definitions.

For example, if we can say “This sounds like it’s actually a domain migration paired with a template migration,” we can steer the conversation a little and rely on a much better shared frame of reference.

If we can raise a challenge that, e.g., the "translation project" a different part of the business is working on is actually a whole bunch of interwoven migration types, then we can raise our concerns earlier and pursue more appropriate resource, budget, and authority (e.g., “This project actually consists of a series of migrations involving templates, content, and domains. Therefore, it’s imperative that we also consider X and Y as part of the project scope.”).

By persisting in labelling this way, stakeholders may gradually come to understand that, e.g., changing the design typically also involves changing the templates, and so the SEO folks should really be involved earlier in the process. By challenging the language, we can challenge the thinking.

Let’s codify migration types

I’ve identified at least seven distinct types of migration. Next time you encounter a "migration" project, you can investigate the proposed changes, map them back to these types, and flag any gaps in understanding, expectations, and resource.

You could argue that some of these aren’t strictly "migrations" in a technical sense (i.e., changing something isn’t the same as moving it), but grouping them this way is intentional.

Remember, our goal here isn’t to neatly categorize all of the requirements for any possible type of migration. There are plenty of resources, guides, and lists which already try to do that.

Instead, we’re trying to provide neat, universal labels which help us (the SEO folks) and them (the business stakeholders) to have shared definitions and to remove unknown unknowns.

They’re a set of shared definitions which we can use to trigger early warning signals, and to help us better manage stakeholder expectations.

Feel free to suggest your own, to grow, shrink, combine, or bin any of these to fit your own experience and requirements!

1. Hosting migrations

A broad bundling of infrastructure, hardware, and server considerations (while these are each broad categories in their own right, it makes sense to bundle them together in this context).

If your migration project contains any of the following changes, you’re talking about a hosting migration, and you’ll need to explore the SEO implications (and development resource requirements) to make sure that changes to the underlying platform don’t impact front-end performance or visibility.

- You’re changing hosting provider.

- You’re changing, adding, or removing server locations.

- You’re altering the specifications of your physical (or virtual) servers (e.g., RAM, CPU, storage, hardware types, etc).

- You’re changing your server technology stack (e.g., moving from Apache to Nginx).*

- You’re implementing or removing load balancing, mirroring, or extra server environments.

- You’re implementing or altering caching systems (database, static page caches, Varnish, object, memcached, etc).

- You’re altering the physical or server security protocols and features.**

- You’re changing, adding or removing CDNs.***

*Might overlap into a software migration if the changes affect the configuration or behavior of any front-end components (e.g., the CMS).

**Might overlap into other migrations, depending on how this manifests (e.g., template, software, domain).

***Might overlap into a domain migration if the CDN is presented as/on a distinct hostname (e.g., AWS), rather than invisibly (e.g., Cloudflare).
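
One rough way to check that a hosting migration hasn’t changed what users and search engines see is to crawl the same set of URLs before and after the switch and compare the HTTP status codes. This is a minimal sketch, assuming you already have both crawls as simple URL-to-status mappings; the snapshot format, function name, and example URLs are hypothetical, and it says nothing about front-end performance, which you’d want to measure separately.

```python
from typing import Dict, List, Tuple

# Hypothetical snapshot format: URL -> HTTP status code, as recorded by
# whichever crawler you use, once before and once after the hosting switch.
Snapshot = Dict[str, int]


def diff_snapshots(before: Snapshot, after: Snapshot) -> List[Tuple[str, int, int]]:
    """Return (url, old_status, new_status) for every URL whose status changed.

    URLs that were crawled before but are missing afterwards are reported
    with a new status of 0.
    """
    changes = []
    for url, old_status in sorted(before.items()):
        new_status = after.get(url, 0)  # 0 means "not seen in the new crawl"
        if new_status != old_status:
            changes.append((url, old_status, new_status))
    return changes


if __name__ == "__main__":
    before = {
        "https://example.com/": 200,
        "https://example.com/products/": 200,
        "https://example.com/old-page": 301,
    }
    after = {
        "https://example.com/": 200,
        "https://example.com/products/": 500,
    }
    for url, old, new in diff_snapshots(before, after):
        print(f"{url}: {old} -> {new}")
```

Comparing status codes is deliberately crude: it won’t catch subtler changes like altered headers or slower responses, but it surfaces new server errors and vanished pages quickly.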

2. Software migrations

Unless your website consists purely of static HTML files, chances are that it’s running some kind of software to serve the right pages, behaviors, and content to users.

If your migration project contains any of the following changes, you’re talking about a software migration, and you’ll need to understand (and have input into) how things like error-code handling, site functionality, and back-end behavior work.

- You’re changing CMS.

- You’re adding or removing plugins/modules/add-ons in your CMS.

- You’re upgrading or downgrading the CMS, or plugins/modules/add-ons (by a significant degree/major release).

- You’re changing the language or framework used to render the website (e.g., adopting Angular 2 or Node.js).

- You’re developing new functionality on the website (forms, processes, widgets, tools).

- You’re merging platforms; e.g., a blog which operated on a separate domain and system is being integrated into a single CMS.*

*Might overlap into a domain migration if you’re absorbing software which was previously located/accessed on a different domain.

3. Domain migrations

Domain migrations can be pleasantly straightforward if executed in isolation, but this is rarely the case. Changes to domains are often paired with (or the result of) other structural and functional changes.

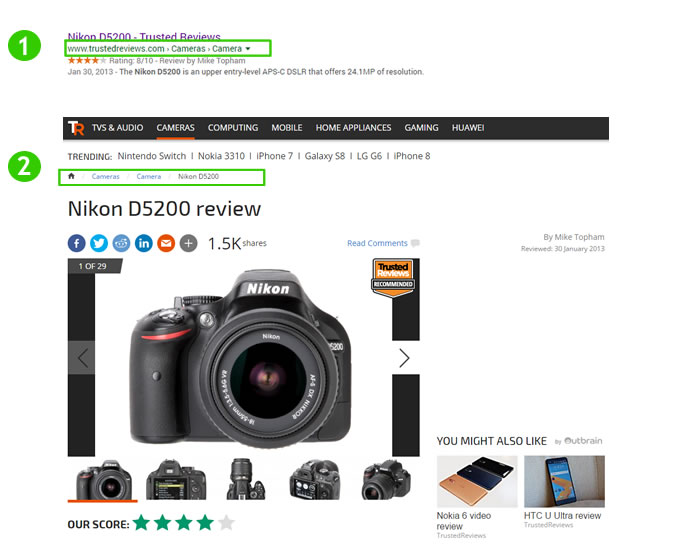

If your migration project alters the URL(s) by which users are able to reach your website, or contains any of the following changes, then you’re talking about a domain migration, and you need to consider how redirects, protocols (e.g., HTTP/S), hostnames (e.g., www/non-www), and branding are impacted.

- You’re changing the main domain of your website.

- You’re buying/adding new domains to your ecosystem.

- You’re adding or removing subdomains (e.g., removing domain sharding following a migration to HTTP/2).

- You’re moving a website, or part of a website, between domains (e.g., moving a blog on a subdomain into a subfolder, or vice-versa).

- You’re intentionally allowing an active domain to expire.

- You’re purchasing an expired/dropped domain.
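
For the simplest case (a change of domain, protocol, and hostname where the paths stay the same), a redirect map can be generated mechanically. This is a minimal sketch under that assumption; the hostnames are hypothetical, and migrations that also change URL patterns (i.e., a template migration too) need a hand-curated mapping instead. It also flags chains, where a redirect target is itself redirected, since every extra hop adds latency and another point of failure.

```python
from typing import Dict, Iterable, List, Set
from urllib.parse import urlsplit, urlunsplit


def build_redirect_map(old_urls: Iterable[str], old_hosts: Set[str], new_host: str) -> Dict[str, str]:
    """Map each URL on one of old_hosts to the same path and query on new_host, over HTTPS.

    Assumes paths are unchanged by the migration; fragments are dropped because
    browsers never send them to the server.
    """
    redirects: Dict[str, str] = {}
    for url in old_urls:
        parts = urlsplit(url)
        if parts.hostname in old_hosts:  # .hostname is lower-cased, without port
            target = urlunsplit(("https", new_host, parts.path or "/", parts.query, ""))
            if target != url:
                redirects[url] = target
    return redirects


def find_chains(redirects: Dict[str, str]) -> List[str]:
    """Return sources whose target is itself redirected (a chain, or possibly a loop)."""
    return [src for src, dst in redirects.items() if dst in redirects]


if __name__ == "__main__":
    old_urls = [
        "http://www.old-brand.example/shoes?colour=red",
        "https://old-brand.example/about",
        "https://cdn.elsewhere.example/logo.png",  # not on an old host, so left alone
    ]
    mapping = build_redirect_map(
        old_urls, {"www.old-brand.example", "old-brand.example"}, "new-brand.example"
    )
    for src, dst in mapping.items():
        print(f"301 {src} -> {dst}")
    print("Chains:", find_chains(mapping))
```

Generating the map from a full crawl (plus URLs from analytics and backlink data) rather than from the CMS alone helps catch legacy URLs that still attract traffic.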

4. Template migrations

Chances are that your website uses a number of HTML templates, which control the structure, layout, and peripheral content of your pages. The logic which controls how your content looks, feels, and behaves (as well as the behavior of hidden/meta elements like descriptions or canonical URLs) tends to live here.

If your migration project alters elements like your internal navigation (e.g., the header or footer), elements in your <head>, or otherwise changes the page structure around your content in the ways I’ve outlined, then you’re talking about a template migration. You’ll need to consider how users and search engines perceive and engage with your pages, how context, relevance, and authority flow through internal linking structures, and how well-structured your HTML (and JS/CSS) code is.

- You’re making changes to internal navigation.

- You’re changing the layout and structure of important pages/templates (e.g., homepage, product pages).

- You’re adding or removing template components (e.g., sidebars, interstitials).

- You’re changing elements in your <head> code, like title, canonical, or hreflang tags.

- You’re adding or removing specific templates (e.g., a template which shows all the blog posts by a specific author).

- You’re changing the URL pattern used by one or more templates.

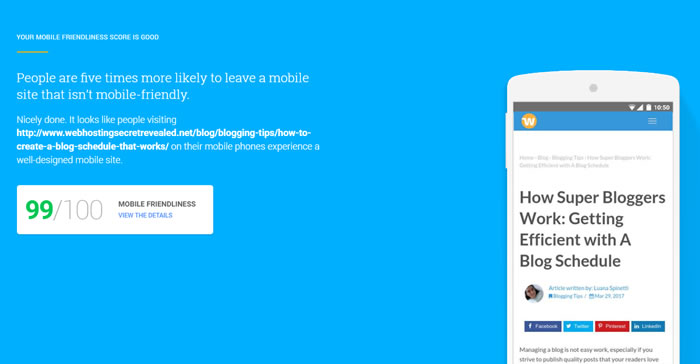

- You’re making changes to how device-specific rendering works.*

*Might involve domain, software, and/or hosting migrations, depending on implementation mechanics.
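
Because so much of a template’s SEO-relevant logic lives in the <head>, one quick check during a template migration is to compare the title, canonical, meta robots, and hreflang tags rendered by the old and new templates for the same page. A sketch using Python’s standard-library html.parser, assuming you can fetch or save both versions of the HTML; the class and function names are illustrative, not from any SEO tool.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple


class HeadExtractor(HTMLParser):
    """Collect the SEO-relevant <head> elements from one HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title = ""
        self.canonical: Optional[str] = None
        self.robots: Optional[str] = None
        self.hreflang: List[Tuple[str, str]] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        a = dict(attrs)
        rel = (a.get("rel") or "").lower().split()
        if tag == "title":
            self._in_title = True
        elif tag == "link" and "canonical" in rel:
            self.canonical = a.get("href")
        elif tag == "link" and "alternate" in rel and a.get("hreflang"):
            self.hreflang.append((a.get("hreflang") or "", a.get("href") or ""))
        elif tag == "meta" and (a.get("name") or "").lower() == "robots":
            self.robots = a.get("content")

    def handle_endtag(self, tag: str) -> None:
        if tag == "title":
            self._in_title = False

    def handle_data(self, data: str) -> None:
        if self._in_title:
            self.title += data


def head_summary(html: str) -> Dict[str, object]:
    parser = HeadExtractor()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "canonical": parser.canonical,
        "robots": parser.robots,
        "hreflang": sorted(parser.hreflang),
    }


def diff_heads(old_html: str, new_html: str) -> Dict[str, Tuple[object, object]]:
    """Return {field: (old_value, new_value)} for every field that differs."""
    old, new = head_summary(old_html), head_summary(new_html)
    return {key: (old[key], new[key]) for key in old if old[key] != new[key]}


if __name__ == "__main__":
    old_page = (
        '<head><title>Red Shoes | Shop</title>'
        '<link rel="canonical" href="https://example.com/shoes/red"></head>'
    )
    new_page = (
        '<head><title>Red Shoes | Shop</title>'
        '<link rel="canonical" href="https://example.com/">'
        '<meta name="robots" content="noindex"></head>'
    )
    for field, (was, now) in diff_heads(old_page, new_page).items():
        print(f"{field}: {was!r} -> {now!r}")
```

Running this over one representative URL per template type (homepage, category, product, article) is usually enough to catch a canonical pointing everywhere at the homepage, or a staging noindex that leaked into production.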

5. Content migrations

Your content is everything which attracts, engages with, and convinces users that you’re the best brand to answer their questions and meet their needs. That includes the words you use to describe your products and services, the things you talk about on your blog, and every image and video you produce or use.

If your migration project significantly changes the tone (including language, demographic targeting, etc), format, or quantity/quality of your content in the ways I’ve outlined, then you’re talking about a content migration. You’ll need to consider the needs of your market and audience, and how the words and media on your website answer to that — and how well it does so in comparison with your competitors.

- You significantly increase or reduce the number of pages on your website.

- You significantly change the tone, targeting, or focus of your content.

- You begin to produce content on/about a new topic.

- You translate and/or internationalize your content.*

- You change the categorization, tagging, or other classification system on your blog or product content.**

- You use tools like canonical tags, meta robots indexation directives, or robots.txt files to control how search engines (and other bots) access and attribute value to a content piece (individually or at scale).

*Might involve domain, software and/or hosting, and template migrations, depending on implementation mechanics.

**May overlap into a template migration if the layout and/or URL structure changes as a result.
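
Before a changed robots.txt goes live, it’s worth checking it against a list of URLs you expect to stay crawlable. A minimal sketch with Python’s urllib.robotparser; the user agent, rules, and URLs are placeholders. Note that this parser applies rules by simple prefix matching, uses the first matching rule, and doesn’t support the * and $ wildcards inside paths, so for anything beyond simple files its answers may differ from how Google interprets the same robots.txt.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: Iterable[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that robots_txt would block for user_agent.

    Uses the standard-library parser: prefix matching, first matching rule wins,
    no support for '*' or '$' inside paths.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    proposed = """\
User-agent: *
Disallow: /checkout/
Disallow: /products/
"""
    must_stay_crawlable = [
        "https://example.com/",
        "https://example.com/products/red-shoes",
    ]
    for url in blocked_urls(proposed, must_stay_crawlable):
        print("Blocked:", url)
```

Keeping the "must stay crawlable" list in version control alongside the robots.txt makes this a cheap check to run on every deployment.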

6. Design migrations

The look and feel of your website doesn’t necessarily directly impact your performance (though user signals like engagement and trust certainly do). However, simple changes to design components can often have unintended knock-on effects and consequences.

If your migration project contains any of the following changes, you’re talking about a design migration, and you’ll need to clarify whether changes are purely cosmetic or whether they go deeper and impact other areas.

- You’re changing the look and feel of key pages (like your homepage).*

- You’re adding or removing interaction layers, e.g., conditionally hiding content based on device or state.*

- You’re making design/creative changes which change the HTML (as opposed to just images or CSS files) of specific elements.*

- You’re changing key messaging, like logos and brand slogans.

- You’re altering the look and feel to react to changing strategies or monetization models (e.g., introducing space for ads in a sidebar, or removing ads in favor of using interstitial popups/states).

- You’re changing images and media.**

*These are also template migrations.

**Don’t forget to 301 redirect these, unless you’re replacing like-for-like filenames (which isn’t always best practice if you wish to invalidate local or remote caches).

7. Strategy migrations

A change in organizational or marketing strategy might not directly impact the website, but a widening gap between a brand’s audience, objectives, and platform can have a significant impact on performance.

If your market or audience (or your understanding of it) changes significantly, or if your mission, your reputation, or the way in which you describe your products/services/purpose changes, then you’re talking about a strategy migration. You’ll need to consider how you structure your website, how you target your audiences, how you write content, and how you campaign (all of which might trigger a set of new migration projects!).

- You change the company mission statement.

- You change the website’s key objectives, goals, or metrics.

- You enter a new marketplace (or leave one).

- Your channel focus (and/or your audience’s) changes significantly.

- A competitor disrupts the market and/or takes a significant amount of your market share.

- Responsibility for the website, its performance, SEO, or digital moves to a different person or team.

- You appoint a new agency or team responsible for the website’s performance.

- Senior/C-level stakeholders leave or join.

- Changes in legal frameworks (e.g., privacy compliance or new/changing content restrictions in prescriptive sectors) constrain your publishing/content capabilities.
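
The seven types above can be turned into a rough triage checklist: list the proposed changes, map each to every migration type it touches, and see which types (and which gaps in resource) a "migration" project really involves. This is a sketch only; the change tags and their mapping are hypothetical simplifications of the lists above, and wherever a footnote says a change "might overlap" into another type, the mapping errs on the side of flagging both.

```python
from typing import Dict, Iterable, Set

# Hypothetical change tags, loosely drawn from the seven lists above. Each maps
# to every migration type it may touch, including "might overlap" footnotes.
CHANGE_TYPES: Dict[str, Set[str]] = {
    "new hosting provider": {"hosting"},
    "add or remove CDN": {"hosting", "domain"},  # domain if the CDN uses its own hostname
    "apache to nginx": {"hosting", "software"},
    "change CMS": {"software"},
    "merge blog into main CMS": {"software", "domain"},
    "change main domain": {"domain"},
    "blog subdomain to subfolder": {"domain"},
    "new navigation": {"template"},
    "change head tags": {"template"},
    "change URL pattern": {"template"},
    "translate content": {"content", "domain", "software", "hosting", "template"},
    "new homepage design": {"design", "template"},
    "change logo": {"design"},
    "enter new market": {"strategy"},
    "new agency": {"strategy"},
}


def triage(changes: Iterable[str]) -> Dict[str, Set[str]]:
    """Group proposed changes by migration type; unknown changes go under 'unclassified'."""
    result: Dict[str, Set[str]] = {}
    for change in changes:
        for mtype in CHANGE_TYPES.get(change, {"unclassified"}):
            result.setdefault(mtype, set()).add(change)
    return result


if __name__ == "__main__":
    proposal = ["change main domain", "new homepage design", "rewrite checkout flow"]
    for mtype, changes in sorted(triage(proposal).items()):
        print(f"{mtype}: {sorted(changes)}")
```

The "unclassified" bucket matters as much as the rest: anything that lands there is a prompt to ask more questions before the scope is signed off.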

Let’s get in earlier

Armed with better definitions, we can begin to force a more considered conversation around what a "migration" project actually involves. We can use a shared language and ensure that stakeholders understand the risks and opportunities of the changes they intend to make.

Unfortunately, however, we don’t always hear about proposed changes until they’ve already been decided and signed off.

People don’t know that they need to tell us that they’re changing domain, templates, hosting, etc. So it’s often too late when — or if — we finally get involved. Decisions have already been made before they trickle down into our awareness.

That’s still a problem.

By the time you’re aware of a project, it’s usually too late to impact it.

While our new-and-improved definitions are a great starting place to catch risks as you encounter them, avoiding those risks altogether requires us to develop a much better understanding of how, where, and when migrations are planned, managed, and start to go wrong.

Let’s identify trigger points

I’ve identified four common scenarios which lead to organizations deciding to undergo a migration project.

If you can keep your ears to the ground and spot these types of events unfolding, you have an opportunity to insert yourself into the conversation and to find out exactly which types of migrations might be looming.

It’s worth finding ways to get added to deployment lists and notifications, internal project management tools, and other systems so that you can look for early warning signs (without creating unnecessary overhead and comms processes).

1. Mergers, acquisitions, and closures

When brands are bought, sold, or merged, this almost universally triggers changes to their websites. These requirements are often dictated from on high, and there’s limited (or no) opportunity to impact the brief.

Migration strategies in these situations are rarely comfortable, and almost always defensive by nature (focusing on minimizing impact/cost rather than capitalizing upon opportunity).

Typically, these kinds of scenarios manifest in a small number of ways:

- The "parent" brand absorbs the website of the purchased brand into their own website; either by "bolting it on" to their existing architecture, moving it to a subdomain/folder, or by distributing salvageable content throughout their existing site and killing the old one (often triggering most, if not every, type of migration).

- The purchased brand website remains where it is, but undergoes a design migration and possibly template migrations to align it with the parent brand.

- A brand website is retired and redirected (a domain migration).

2. Rebrands

All sorts of pressures and opportunities lead to rebranding activity. Pressures to remain relevant, to reposition within marketplaces, or change how the brand represents itself can trigger migration requirements — though these activities are often led by brand and creative teams who don’t necessarily understand the implications.

Often, the outcome of branding processes and initiatives creates a new or alternate understanding of markets and consumers, and/or creates new guidelines/collateral/creative which must be reflected on the website(s). Typically, this can result in:

- Changes to core/target audiences, and the content or language/phrasing used to communicate with them (strategy and content migrations, and more if this involves, for example, opening up to international audiences).

- New collateral, replacing or adding to existing media, content, and messaging (content and design migrations).

- Changes to website structure and domain names (template and domain migrations) to align to new branding requirements.

3. C-level vision

It’s not uncommon for senior stakeholders to decide that the strategy to save a struggling business, to grow into new markets, or to make their mark on an organization is to launch a brand-new, shiny website.

These kinds of decisions often involve a scorched-earth approach, tearing down the work of their predecessors or of previously under-performing strategies. And the more senior the decision-maker, the less likely they are to understand the implications of their decisions.

In these kinds of scenarios, your best opportunity to avert disaster is to watch for warning signs and to make yourself heard before it’s too late. In particular, you can watch out for:

- Senior stakeholders with marketing, IT, or C-level responsibilities joining, leaving, or being replaced (in particular if in relation to poor performance).

- Boards of directors, investors, or similar pressuring web/digital teams to hit unrealistic performance goals (given current performance/constraints).

- Gradual reduction in budget and resource for day-to-day management and improvements to the website (as a likely prelude to a big strategy migration).

- New agencies being brought on board to optimize website performance, who’re hindered by the current framework/constraints.

- The adoption of new martech and marketing automation software.*

*Integrations of solutions like Salesforce, Marketo, and similar sometimes rely on utilizing proxied subdomains, embedded forms/content, and other mechanics which will need careful consideration as part of a template migration.

4. Technical or financial necessity

The current website is in such a poor, restrictive, or cost-ineffective condition that it’s impossible to adopt new-and-required improvements (such as compliance with new standards, an integration of new martech stacks, changes following a brand purchase/merger, etc).

Generally, like the kinds of C-level “new website” initiatives I’ve outlined above, these result in scorched earth solutions.

Particularly frustrating, these are the kinds of migration projects which you yourself may well argue and fight for, for years on end, only to then find that they’ve been scoped (and maybe even begun or completed) without your input or awareness.

Here are some danger signs to watch out for which might mean that your migration project is imminent (or, at least, definitely required):

- Licensing costs for part or all of the platform become prohibitive (e.g., enterprise CMS platforms, user seats, developer training, etc).

- The software or hardware skill set required to maintain the site becomes rarer or more expensive (e.g., outdated technologies).

- Minor-but-urgent technical changes take more than six months to implement.

- New technical implementations/integrations are agreed upon in principle, budgeted for, but not implemented.

- The technical backlog of tasks grows faster than it shrinks as it fills with breakages and fixes rather than new features, initiatives, and improvements.

- The website ecosystem doesn’t support the organization’s ways of working (e.g., the organization adopts agile methodologies, but the website only supports waterfall-style codebase releases).

- Key technology which underpins the site is being deprecated, and there’s no easy upgrade path.*

*Will likely trigger hosting or software migrations.
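
Two of these danger signs (a backlog that grows faster than it shrinks, and urgent changes that take more than six months) can be measured rather than guessed at, if your team exports tickets from an issue tracker. A minimal sketch, assuming a hypothetical export with opened/closed dates and an "urgent" flag; the field names and the 183-day threshold (roughly six months) are illustrative.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Ticket:
    # Hypothetical export format from an issue tracker.
    opened: date
    closed: Optional[date]
    urgent: bool = False


def backlog_growth(tickets: List[Ticket], start: date, end: date) -> int:
    """Tickets opened minus tickets closed in [start, end]; positive means the backlog grew."""
    opened = sum(1 for t in tickets if start <= t.opened <= end)
    closed = sum(1 for t in tickets if t.closed and start <= t.closed <= end)
    return opened - closed


def slow_urgent(tickets: List[Ticket], today: date, max_days: int = 183) -> List[Ticket]:
    """Urgent tickets that took (or have so far taken) more than max_days to resolve."""
    return [t for t in tickets if t.urgent and ((t.closed or today) - t.opened).days > max_days]


if __name__ == "__main__":
    tickets = [
        Ticket(date(2024, 1, 10), None, urgent=True),
        Ticket(date(2024, 2, 1), date(2024, 2, 5)),
        Ticket(date(2024, 3, 1), None),
    ]
    print("Net growth Q1:", backlog_growth(tickets, date(2024, 1, 1), date(2024, 3, 31)))
    print("Slow urgent tickets:", slow_urgent(tickets, today=date(2024, 9, 1)))
```

A single quarter of net growth proves little; a trend that stays positive quarter after quarter is the kind of evidence that can get you into the "new website" conversation before it’s been scoped without you.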

Let’s not count on this

While this kind of labelling undoubtedly goes some way to helping us spot and better manage migrations, it’s far from a perfect or complete system.

In fact, I suspect it may be far too ambitious, and unrealistic in its aspiration. Accessing conversations early enough — and being listened to and empowered in those conversations — relies on the goodwill and openness of companies who aren’t always completely bought into or enamored with SEO.

This will only work in an organization which is open to this kind of thinking and internal challenging — and chances are, they’re not the kinds of organizations who are routinely breaking their websites. The very people who need our help and this kind of system are fundamentally unsuited to receive it.

I suspect, then, it might be impossible in many cases to make the kinds of changes required to shift behaviors and catch these problems earlier. In most organizations, at least.

Avoiding disasters resulting from ambiguous migration projects relies heavily on broad education. Everything else aside, people tend to change companies faster than you can build deep enough tribal knowledge.

That doesn’t mean that the structure isn’t still valuable, however. The types of changes and triggers I’ve outlined can still be used as alarm bells and direction for your own use.

Let’s get real

If you can’t effectively educate stakeholders on the complexities and impact of changing their website, there are more "lightweight" solutions.

At the very least, you can turn these kinds of items (expanded with your own, and in more detail) into simple lists which can be printed off, laminated, and stuck to a wall. If nothing else, perhaps you'll remind somebody to pick up the phone to the SEO team when they recognize an issue.

In a more pragmatic world, stakeholders don’t necessarily have to understand the nuance or the detail if they at least understand that they’re meant to ask for help when they’re changing domain, for example, or adding new templates to their website.

Whilst this doesn’t solve the underlying problems, it does provide a mechanism through which the damage can be systematically avoided or limited. You can identify problems earlier and be part of the conversation.

If it’s still too late and things do go wrong, you'll have something you can point to and say “I told you so,” or, more constructively perhaps, “Here’s the resource you need to avoid this happening next time.”

And in your moment of self-righteous vindication, having successfully made it through this post and now armed to save your company from a botched migration project, you can migrate over to the bar. Good work, you.

Thanks to…

This turned into a monster of a post, and its scope meant that it almost never made it to print. Thanks to a few folks for helping me to shape, form, and ship it. In particular:

- Hannah Thorpe, for help in exploring and structuring the initial concept.

- Greg Mitchell, for a heavy dose of pragmatism in the conclusion.

- Gerry White, for some insightful additions and the removal of dozens of typos.

- Sam Simpson, for putting up with me spending hours rambling and ranting at her about failed site migrations.


Source:

Moz Blog